Squad 100000+ Questions for Machine Comprehension of Text Reviews

Machine Comprehension with BERT

Utilise Deep Learning for Question Answering

The GitHub repository with the code can be found here. The article will go over how to set this up. Estimated time: under 30 minutes.

Machine (Reading) Comprehension is the field of NLP where we teach machines to understand and answer questions using unstructured text.

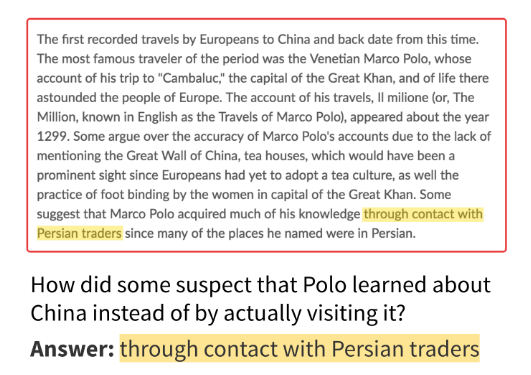

In 2016, StanfordNLP put together the SQuAD (Stanford Question Answering Dataset) dataset, which consisted of over 100,000 question-answer pairs formulated from Wikipedia articles. The challenge was to train a machine learning model to answer questions based on a contextual document. When provided with a contextual document (free-form text) and a question, the model would return the subset of the text most likely to answer the question.

Top AI practitioners around the world tackled this problem, but after two years, no model had beaten the human benchmark. However, at the end of 2018, the brilliant minds at Google AI Language introduced BERT (Bidirectional Encoder Representations from Transformers), a general-purpose language understanding model. With some fine-tuning, this model was able to surpass the human benchmark when compared against the SQuAD test set.

From the paper SQuAD: 100,000+ Questions for Machine Comprehension of Text, these were the metrics used for evaluation:

Exact match. This metric measures the percentage of predictions that match any one of the ground truth answers exactly.

(Macro-averaged) F1 score. This metric measures the average overlap between the prediction and ground truth answer. We treat the prediction and ground truth as bags of tokens, and compute their F1. We take the maximum F1 over all of the ground truth answers for a given question, and then average over all of the questions.
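To make the two metrics concrete, here is a minimal Python sketch of how they could be computed. It is not the official evaluation script: the function names are made up for illustration, and the normalization step (lowercasing, dropping punctuation and the articles a/an/the) is an assumption modeled on common SQuAD evaluation practice.

```python
import re
import string
from collections import Counter


def normalize(text):
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, ground_truths):
    """1.0 if the prediction equals any ground truth after normalization."""
    pred = normalize(prediction)
    return float(any(pred == normalize(gt) for gt in ground_truths))


def token_f1(prediction, ground_truth):
    """F1 between prediction and one ground truth, both treated as bags of tokens."""
    pred_tokens = normalize(prediction).split()
    truth_tokens = normalize(ground_truth).split()
    if not pred_tokens or not truth_tokens:
        # Both empty counts as a match; only one empty counts as a miss.
        return float(pred_tokens == truth_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(truth_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(truth_tokens)
    return 2 * precision * recall / (precision + recall)


def squad_scores(predictions, references):
    """predictions: list of str; references: list of lists of acceptable answers."""
    n = len(predictions)
    em = sum(exact_match(p, refs) for p, refs in zip(predictions, references))
    # Max F1 over the ground truths of each question, then averaged over questions.
    f1 = sum(max(token_f1(p, gt) for gt in refs)
             for p, refs in zip(predictions, references))
    return {"exact_match": 100.0 * em / n, "f1": 100.0 * f1 / n}


predictions = ["the Eiffel Tower", "in 1889"]
references = [["Eiffel Tower", "The Eiffel Tower"], ["1889"]]
scores = squad_scores(predictions, references)
print(scores)
```

In this toy example the first prediction matches exactly, while the second only overlaps on one token, so it contributes to F1 but not to exact match.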

Based on the initial SQuAD dataset:

- Human annotators achieved an exact match score of 82.304% and an F1-score of 91.221%

- The original BERT model (which ranked 11th on the leaderboard, primarily beaten by other variations of BERT) achieved an exact match score of 85.083% and an F1-score of 91.835%

Today, I will show you how to c̶h̶e̶a̶t̶ ̶o̶n̶ ̶y̶o̶u̶r̶ ̶g̶r̶a̶d̶e̶ ̶3̶ ̶h̶o̶m̶e̶w̶o̶r̶k set up your own reading comprehension system using BERT. The GitHub repository with the code can be found here.

To get started, you will need Docker. 🐳

Set up Docker

Docker is useful for containerizing applications. We will be using Docker to make this work more usable and the results more reproducible. Follow these instructions to install Docker on your system. You'll also need docker-compose, which comes natively with Docker on macOS and Windows. If you are using Linux, you can install it here.

Save the code locally from my Github Repo

All the code and necessary dependencies are in the repo with the exception of the SQuAD data and the pre-trained weights. Note: the data is only required if you want to train the model yourself. If not, you can use the weights I pre-trained. Another note: I wouldn't recommend training the model unless you have a powerful GPU or a lot of time.

If you want to train the model yourself …

Download the SQuAD 2.0 dataset here. Save "Training Set v2.0" and "Dev Set v2.0" to bert_QA/data.
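Before training, it can help to sanity-check the downloaded files. The sketch below assumes the standard SQuAD 2.0 JSON layout (a top-level "data" list of articles, each with "paragraphs" that hold a "context" and a list of "qas", where unanswerable questions carry "is_impossible": true). The helper names and the file path in the comment are illustrative, not part of the repo.

```python
import json


def load_squad(path):
    """Read a SQuAD-format JSON file into a Python dict."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize_squad(dataset):
    """Count paragraphs, answerable and unanswerable questions."""
    counts = {"paragraphs": 0, "answerable": 0, "unanswerable": 0}
    for article in dataset["data"]:
        for paragraph in article["paragraphs"]:
            counts["paragraphs"] += 1
            for qa in paragraph["qas"]:
                key = "unanswerable" if qa.get("is_impossible", False) else "answerable"
                counts[key] += 1
    return counts


# Tiny in-memory stand-in with the same shape as the real files.
sample = {
    "version": "v2.0",
    "data": [{
        "title": "BERT",
        "paragraphs": [{
            "context": "BERT was introduced at the end of 2018.",
            "qas": [
                {"id": "q1", "question": "When was BERT introduced?",
                 "is_impossible": False,
                 "answers": [{"text": "the end of 2018", "answer_start": 23}]},
                {"id": "q2", "question": "Who invented the telephone?",
                 "is_impossible": True, "answers": []},
            ],
        }],
    }],
}
summary = summarize_squad(sample)
print(summary)

# With a real file (hypothetical path, adjust to where you saved it):
# print(summarize_squad(load_squad("data/train-v2.0.json")))
```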

If you want to use pre-trained weights …

I already trained the model against the SQuAD 2.0 dataset. You can download the weights here. Unzip the file and save the contents as bert_QA/weights.

Create the Docker container

Docker containers are work environments built using instructions provided in the docker image. We need a docker-compose.yaml config file to define what our container will look like.

I made a custom docker image for huggingface's PyTorch transformers, which you can find on dockerhub. For the purpose of this tutorial, you will not need to pull any images, as the config file already does that. The config file will also mount our local bert_QA folder as /workspace in the container.

- Spin upwardly our container by running

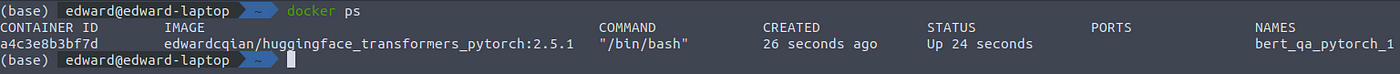

docker-compose up -din the root directory from terminal/beat. This will take several minutes the first time. - Check if our comprise is up and running using

docker ps.

- Adhere a bash beat out to the running container:

docker exec -it <your_container_name> bash

Train the Model

Skip this step if you are using my pre-trained weights. We will be using the default training script provided by huggingface to train the model.

Run this in the bash shell:

Note: if you don't have a GPU, per_gpu_train_batch_size will not do anything

This will train and save the model weights to the weights directory.

Making Inferences using the Model

Now let's use the model to do some cool stuff.

Start an ipython session in the shell and import the ModelInference module to load weights from weights/. Pass the contextual document as a parameter to mi.add_target_text(). After the corpus has been ingested, use mi.evaluate() to ask questions. The module will only return an answer if the model is confident the answer exists in the text. Otherwise, the model will output "No valid answers found.".
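The "only answer when confident" behaviour can be illustrated with a small, self-contained sketch. This is not the code of the ModelInference module; it assumes the usual SQuAD 2.0 convention for BERT-style models, where position 0 (the [CLS] token) stands for "no answer" and the model abstains when the null score beats the best span score by more than a threshold. The function names, the threshold value, and the toy logits are all made up for illustration.

```python
def best_span(start_logits, end_logits, max_answer_len=30):
    """Return (score, start, end) of the highest-scoring non-null span.

    Index 0 is skipped because it is reserved for the "no answer" position.
    """
    best = (float("-inf"), None, None)
    for s in range(1, len(start_logits)):
        for e in range(s, min(s + max_answer_len, len(end_logits))):
            score = start_logits[s] + end_logits[e]
            if score > best[0]:
                best = (score, s, e)
    return best


def answer_or_abstain(tokens, start_logits, end_logits, null_threshold=0.0):
    """Return the best answer span, or a fallback message if "no answer" wins."""
    null_score = start_logits[0] + end_logits[0]
    span_score, s, e = best_span(start_logits, end_logits)
    if s is None or null_score - span_score > null_threshold:
        return "No valid answers found."
    return " ".join(tokens[s:e + 1])


tokens = ["[CLS]", "bert", "was", "introduced", "in", "2018"]

# Case 1: the model strongly prefers the span covering "2018".
confident = answer_or_abstain(
    tokens,
    start_logits=[0.1, 0.2, 0.0, 0.1, 0.3, 4.0],
    end_logits=[0.2, 0.1, 0.0, 0.2, 0.1, 5.0],
)

# Case 2: the "no answer" position dominates, so the function abstains.
unsure = answer_or_abstain(
    tokens,
    start_logits=[5.0, 0.1, 0.0, 0.1, 0.2, 0.3],
    end_logits=[5.0, 0.1, 0.0, 0.2, 0.1, 0.4],
)

print(confident)
print(unsure)
```

Raising null_threshold makes the sketch answer more often at the cost of more wrong answers; lowering it makes it abstain more readily.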

Conclusion

NLP has come a long way over the past few years. Pre-trained BERT weights have had a similar impact on NLP as AlexNet had on image recognition. They have enabled numerous novel applications of natural language understanding.


Source: https://towardsdatascience.com/machine-comprehension-with-bert-6eadf16c87c1
